Introduction: The Growing Concern Around AI-Generated Content

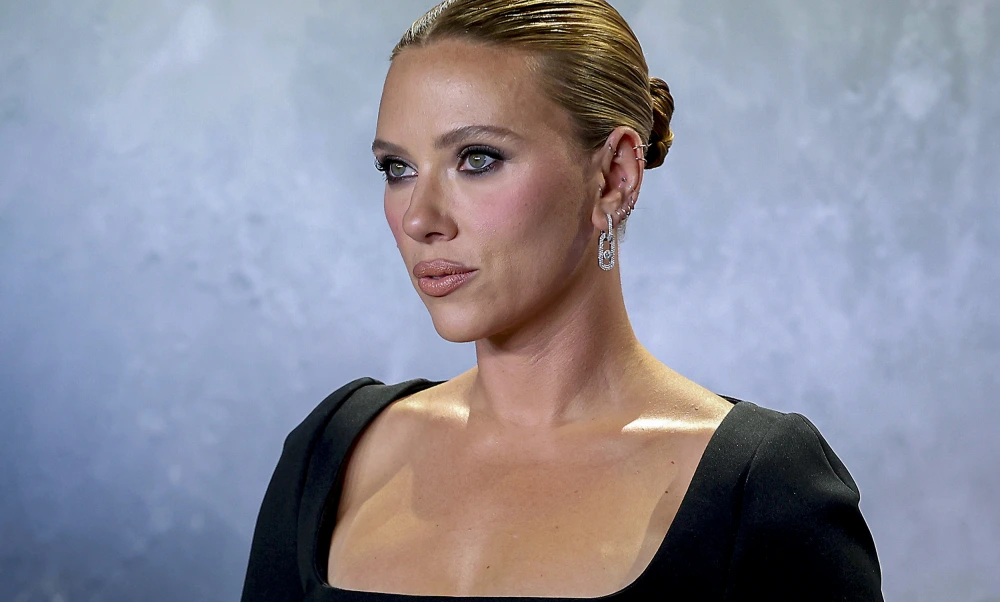

Scarlett Johansson has become a vocal advocate for stricter AI safety laws. Her call to action followed a disturbing incident: a viral fake video falsely depicted celebrities, including herself, responding to the antisemitic remarks of Kanye West (now known as Ye).

This misuse of artificial intelligence highlights the urgent need for regulation and accountability in how deepfake content is created and distributed. As someone invested in both entertainment and the ethics of technology, I see this as a pivotal moment, not only for celebrities like Johansson but for society at large. In this guide, we'll look at the details of the viral video, Johansson's stance on AI safety, and why regulating this fast-moving technology is critical.

The Viral Fake Video That Sparked Controversy

The controversy began when a fabricated video surfaced online, purportedly showing several high-profile celebrities protesting Ye's antisemitic comments. Its creators used AI tools to make the footage appear real. Among those featured was Scarlett Johansson, whose likeness was convincingly replicated without her consent.

Viewers unaware of the video's origins took the clip at face value, and it sparked outrage and confusion across social media platforms. While some praised the "celebrities" for taking a stand against hate speech, others soon realized the footage was entirely fabricated: a product of deepfake technology capable of deceiving a wide audience.

Why This Incident Is Particularly Troubling

- Misinformation Amplified: Deepfakes can spread false narratives, sowing division and undermining trust in legitimate information sources.

- Violation of Consent: Celebrities and everyday individuals alike can have their identities taken and misused without permission.

- Potential Harm to Marginalized Groups: By weaponizing public figures’ images to address sensitive topics like antisemitism, bad actors risk trivializing serious issues or exacerbating tensions.

Scarlett Johansson’s Call for Stricter AI Regulations

Scarlett Johansson, no stranger to the dangers of digital manipulation, has been outspoken about the ethical implications of AI-generated content. She had previously objected to unauthorized uses of her likeness, including a 2024 dispute with OpenAI over a ChatGPT voice she said sounded strikingly similar to her own. This time, she issued a public statement condemning the viral video and demanding legislative action.

In her statement, Johansson emphasized the following points:

1. AI Must Be Held Accountable

“We are witnessing the unchecked proliferation of technologies capable of fabricating reality,” Johansson wrote. “Without proper safeguards, we risk living in a world where truth becomes indistinguishable from lies.”

She called for governments and tech companies to collaborate on establishing clear guidelines for the development and deployment of AI tools, particularly those used to create deepfakes.

2. Protection for Public Figures and Private Citizens Alike

While celebrities often bear the brunt of such abuses due to their visibility, Johansson stressed that ordinary people are equally at risk. “This isn’t just about protecting famous faces—it’s about preserving everyone’s right to control how their likeness is used,” she said.

3. Education and Awareness Are Key

Johansson urged the public to become more discerning consumers of online content. She encouraged people to question the authenticity of viral videos and seek out credible sources before reacting or sharing potentially harmful material.

The Broader Implications of Unregulated AI Technology

The rise of deepfakes raises significant questions about privacy, security, and freedom of expression. If left unchecked, these technologies could have devastating consequences not only for individuals but also for democracy itself. Here’s why this issue demands immediate attention:

1. Erosion of Trust in Media

As deepfakes become increasingly convincing, distinguishing fact from fiction grows harder. This erosion of trust threatens journalism, politics, and interpersonal relationships alike.

2. Weaponization Against Vulnerable Communities

Deepfakes can be used to target marginalized groups by spreading hateful propaganda or discrediting activists fighting for justice. The recent video tied to Ye's antisemitic remarks shows how easily this technology can exploit existing prejudices.

3. Legal Gray Areas

Current laws struggle to keep pace with technological advancements. Many jurisdictions lack comprehensive frameworks to address the misuse of AI, leaving victims with limited recourse.

What Can Be Done to Combat the Threat of Deepfakes?

Addressing the challenges posed by AI-generated content requires a multifaceted approach. Below are some actionable steps stakeholders can take to mitigate harm:

1. Implement Stronger Legislation

Governments must enact laws that hold creators and distributors of malicious deepfakes accountable. These regulations should include penalties for non-consensual use of someone’s likeness and mechanisms for reporting abuse.

2. Develop Detection Tools

Tech companies should invest in AI-powered solutions capable of identifying and flagging deepfake content. Social media platforms, in particular, play a crucial role in preventing the spread of misinformation.

3. Promote Digital Literacy

Educational initiatives aimed at teaching critical thinking skills can empower individuals to recognize and resist manipulative content. Schools, community organizations, and online resources all have a part to play in fostering digital literacy.

4. Encourage Ethical Innovation

Rather than stifling innovation, regulators and developers should work together to ensure AI is used responsibly. Establishing industry standards and ethical guidelines can help steer progress toward positive outcomes.

Lessons We Can Learn from This Incident

Scarlett Johansson’s response to the viral video offers valuable insights into navigating the complex intersection of technology, ethics, and human rights:

1. Advocacy Starts with Awareness

By speaking out, Johansson has brought much-needed attention to the dangers of unregulated AI. Her courage serves as a reminder that silence in the face of injustice only perpetuates harm.

2. Collaboration Is Essential

Tackling the deepfake crisis requires cooperation among policymakers, tech leaders, and the general public. No single entity can solve this problem alone.

3. Empathy Matters

Behind every manipulated image or video is a real person whose dignity and autonomy may be compromised. Approaching this issue with empathy ensures that solutions prioritize humanity over profit or convenience.

Final Thoughts

The viral fake video targeting Scarlett Johansson and other celebrities is a stark reminder of both the power and the peril of AI-generated content. As technology continues to evolve, so too must our commitment to safeguarding truth, privacy, and human dignity.

Johansson's impassioned plea for AI safety laws underscores the importance of proactive measures to prevent future abuses. Whether through legislation, education, or innovation, we all have a role to play in shaping a safer digital landscape.

So, what will you do to contribute to this effort? Will you educate yourself and others about the risks of deepfakes? Advocate for stronger protections? Or simply pause to verify the authenticity of viral content before hitting “share”? Every action counts in the fight against misinformation.

And now, I challenge you: How do you think society should address the growing threat of AI-generated deception? Share your thoughts—I’d love to hear them!